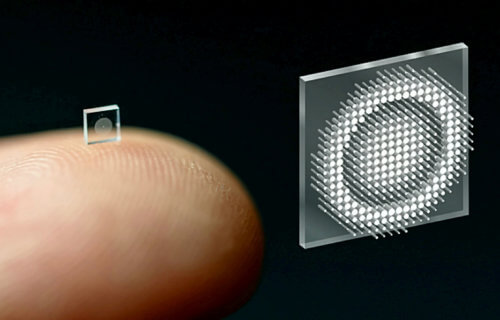

PRINCETON, N.J. — The world’s smallest camera, the size of a grain of salt, may soon be coming to mobile phones everywhere. Computer scientists from Princeton University and the University of Washington say the small device they created can take crisp, full-color pictures just as well as conventional cameras which are 500,000 times bigger.

The new technology may help doctors diagnose and treat diseases far less invasively than traditional endoscopy allows today. It could also improve imaging more broadly. Thousands of the tiny devices could cover the whole surface of a smartphone and act as one giant camera, so phones would no longer need three separate cameras to take pictures.

Traditional cameras use several curved glass or plastic lenses to bend light rays into focus. The new device instead uses a “metasurface,” which can be manufactured much like a computer chip. The metasurface is just half a millimeter wide and is made up of 1.6 million tiny cylindrical posts, no two of which are exactly alike.

The posts act like tiny antennae. Combined with image-processing algorithms, their interactions with light produce better pictures and capture a wider field of view than any full-color metasurface camera built so far. The metasurfaces are made from silicon nitride, a glass-like material that is easy to manufacture and cheaper to produce than the lenses in conventional cameras.

Older metasurfaces took poor pictures

Senior study author Dr. Felix Heide notes that a key innovation in the camera’s development was integrating the design of the optical surface with the signal-processing algorithms that produce the final image.

The team adds that this integrated design boosted the camera’s performance in natural light. Previous metasurface cameras required the pure laser light of a laboratory or other ideal conditions to produce high-quality images.

When the researchers compared their device with other metasurface cameras, they found that the other models produced blurry pictures, captured only narrow fields of view, and could not capture the full spectrum of visible light.

“It’s been a challenge to design and configure these little nano-structures to do what you want,” says study co-lead author Ethan Tseng, a computer science Ph.D. student at Princeton, in a university release. “For this specific task of capturing large field of view RGB images, it was previously unclear how to co-design the millions of nano-structures together with post-processing algorithms.”

The next generation of optical technology

Study co-lead author Dr. Shane Colburn tackled this challenge by building a computer simulator to test different configurations of the tiny antennae. Simulating them normally consumes “massive amounts of memory and time” because of the huge number of antennae and the complexity of their interactions with light, so Dr. Colburn built a model that could predict, with sufficient accuracy, how well a given metasurface would form images.

“Although the approach to optical design is not new, this is the first system that uses a surface optical technology in the front end and neural-based processing in the back,” says Joseph Mait, former chief scientist at the U.S. Army Research Laboratory, who did not take part in the study.

“The significance of the published work is completing the Herculean task to jointly design the size, shape and location of the metasurface’s million features and the parameters of the post-detection processing to achieve the desired imaging performance.”

The researchers say they now want to add object detection and other sensing capabilities to the camera, which could be useful in medicine and robotics.

The findings appear in the journal Nature Communications.

The National Science Foundation, the U.S. Department of Defense, the UW Reality Lab, Facebook, Google, Futurewei Technologies, and Amazon all supported this project.

South West News Service writer Gwyn Wright contributed to this report.